Security Copilot: “Delivering Security AI in a Responsible Way”

Fresh from dazzling Microsoft 365 customers with the promise that creating reports, email, presentations, and spreadsheets will be much easier when Microsoft 365 Copilot appears in the Office apps, Microsoft launched another AI-powered Copilot on March 28. This time, the intention is to help security analysts make sense of the mass of signals that they must interpret to understand when attackers are active. As the headline goes, “Microsoft Security Copilot: Empowering defenders with AI.”

Although Microsoft 365 Copilot will no doubt affect how more people work (and create new administration and ethical challenges), I think the use case for Security Copilot is more obvious, if only because of the growing threat and the catastrophic effect on companies when attackers compromise an infrastructure like a Microsoft 365 tenant. I can create my own reports without involving Microsoft 365 Copilot. But even after taking steps to harden a tenant and following my own advice to deploy MFA, conditional access policies, and so on, I might not have the skills and tools necessary to resist a determined attacker whose efforts end in something like a “wiperware” attack.

According to Microsoft, they gather 65 trillion signals daily for threat intelligence. The signals come from products like Microsoft Defender for Office 365, Azure AD sign-in logs, Microsoft Sentinel, and Intune. Customers might very well ask how effective Microsoft is at interpreting those signals to find real threats when they see obvious spam get through to user inboxes. The fact is that the threat horizon changes constantly as attackers try new techniques to penetrate defenses. It takes time for the defenders to realize that hackers are trying something new, and that the altered or innovative technique can bypass the checks that should stop penetration.

It’s frustrating when a poorly written email that’s a blatant attempt to hoodwink the recipient makes it through Exchange Online Protection, but it’s a symptom of the problem of having to make sense of the sheer amount of data involved in detecting attacks.

How Security Copilot Might Help

Microsoft says that “Security Copilot can augment security professionals with machine speed and scale, so human ingenuity is deployed where it matters most.” In other words, the aim is for the AI to save time and focus security investigations on the most important issues. They describe three principles to explain what they’re trying to achieve.

- Simplify the complex. Some security incidents seem like looking for a needle in the proverbial haystack. Where do you start looking? Engaging in a natural language question-and-answer session with Security Copilot should be helpful, if those posing the questions ask the right ones.

- Catch what others miss. Even the best security sleuth can overlook something, including issues that are clear and obvious. Software is more pedantic and will examine everything but can overlook an item that a human will detect instantly, like spam. Microsoft says that Copilot “anticipates a threat actor’s next move with continuous reasoning based on Microsoft’s global threat intelligence.” I imagine that AI is better at figuring out what the 65 trillion daily signals mean when it comes to assessing threats than the average security professional, especially when the model is guided by analysts in areas like threat hunting and incident response. But we’ll have to wait and see how Security Copilot works when exposed to the real-world pressures of average tenants.

- Address the talent gap. No organization has enough security professionals to do everything. Using the continually learning model of AI means that everyone gains from Copilot’s answers to security questions. The answers might sometimes be wrong, but they’re likely to be a valuable guide to where to look. Experienced security professionals should benefit from Copilot’s ability to produce some answers, but they also need to be prepared to dive in and apply human intelligence to drive to a complete answer.

Possibly the most important thing about Security Copilot is that it’s a defense mechanism to offset how attackers will use AI. Take the example of a phishing email that’s poorly worded but gets through. Most readers will discard the message. But if the attacker uses ChatGPT to refine and improve the text so that it reads like a well-written business communication, the phishing attempt has a better chance of being accepted by the recipient, which might then lead to a successful business email compromise attack.

Many Issues to Work Through

Obviously, there are still a bunch of issues to work through. Even after Microsoft eventually makes Security Copilot available to customers, security analysts will need to figure out how best to use features like the pinboard (to store Copilot results as an investigation develops) and the prompt book, a playbook of automation steps that form the basis for an investigation.

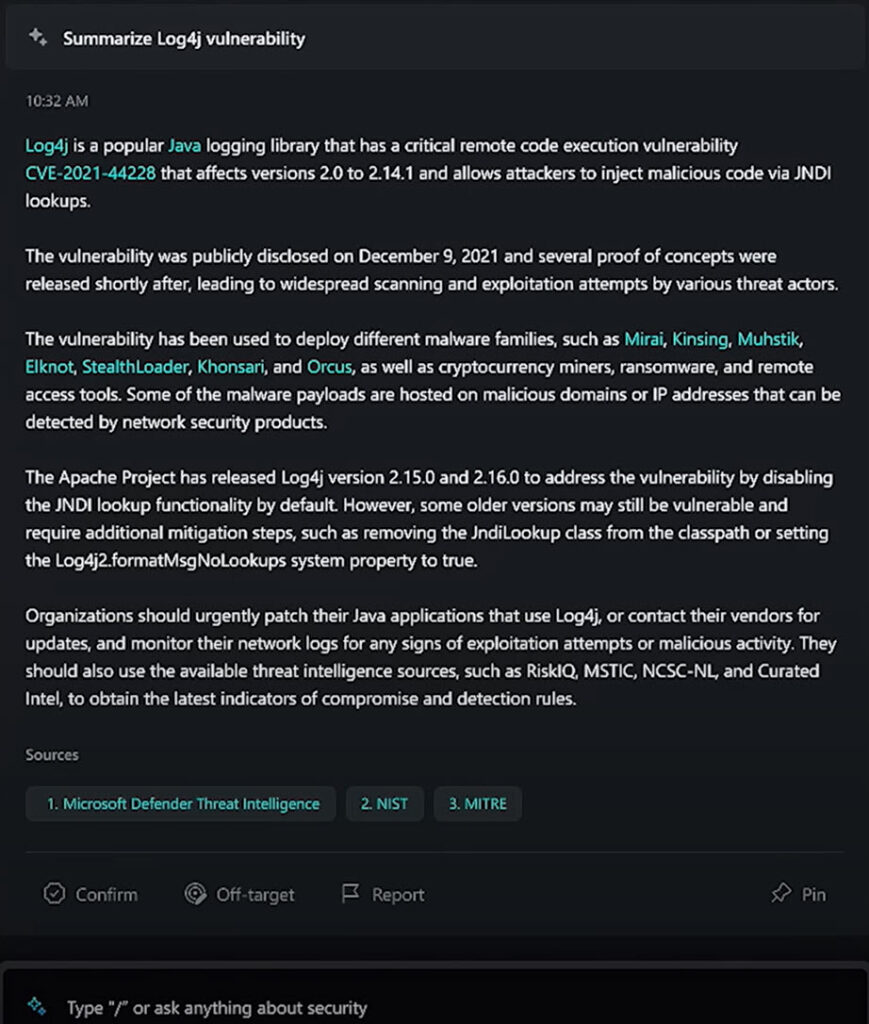

It will take time for humans to understand how best to interact with Security Copilot (Figure 1): how to refine questions and probe Copilot for more information, how to detect hallucinations (when the AI gets things wrong), and how to integrate Copilot into the processes and procedures that already exist within the organization.

Integrating Copilot into day-to-day operations is especially challenging for environments that span more than the Microsoft Cloud. Like any AI tool, Security Copilot is only as good as the data that is available for it to model. If the data comes solely from the Microsoft part of an environment, it may leave gaps that Copilot can’t fill. Extra effort might be necessary to close gaps, such as reducing the number of unmanaged devices.

No Information About Availability and Cost

Microsoft is testing Security Copilot with some carefully selected customers. No information is available about pricing or when Security Copilot might be generally available. Given the breadth of customers using the Microsoft Cloud, from the largest enterprises to classic mom-and-pop businesses, it will be interesting to see if Microsoft sets any prerequisites for Security Copilot, such as a minimum number of seats or the requirement for other products like Microsoft Sentinel. The truth is that we don’t know much about how the promise will be achieved in a customer environment. No doubt, all will be revealed in time.